Robots.txt is a small text file with a set of instructions for search engine spiders and bots. Its contents help website owners and webmasters block or grant bots access to different parts of a website, which determines what those bots crawl for search results. Though not mandatory, it is advisable to have a robots.txt file, and it must be placed in the root of the site (like tothepc.com/robots.txt). A search engine bot looks for the robots.txt file to find out which parts of the website it may crawl.

1. Basics of robots.txt file generation

You can use simple directives in the robots.txt file to block or allow access to different parts of your website or blog.

– Block spiders from all parts of the website

User-agent: *

Disallow: /

– Allow spiders to access all parts of the website (an empty Disallow value blocks nothing)

User-agent: *

Disallow:

– Block spiders from a specific part of the website (say, the files folder)

User-agent: *

Disallow: /files/

– Block a specific spider (say, Googlebot) from accessing a specific file (say, abc.html in the files folder)

User-agent: Googlebot

Disallow: /files/abc.html

Besides the above, there are a number of other directives (such as Allow) for finer-grained blocking or access control over different parts of a website.
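To check rules like these before uploading them, one option is Python's standard-library `urllib.robotparser` module. The sketch below is a minimal offline check; the helper name `is_allowed`, the bot name `Bingbot`, and the sample paths are illustrative assumptions. Two caveats: Python's parser treats a blank line as the end of a group, so the sample files keep `User-agent` and `Disallow` on consecutive lines, and its matching is simpler than that of major search engines, so treat the result as a sanity check rather than a guarantee.

```python
from urllib.robotparser import RobotFileParser


def is_allowed(robots_txt: str, user_agent: str, path: str) -> bool:
    """Parse robots.txt text and report whether user_agent may fetch path."""
    parser = RobotFileParser()
    # parse() accepts a list of lines, so no network request is made.
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, path)


# The four example files from this section (no blank lines inside a group).
BLOCK_ALL = "User-agent: *\nDisallow: /"
ALLOW_ALL = "User-agent: *\nDisallow:"
BLOCK_FOLDER = "User-agent: *\nDisallow: /files/"
BLOCK_FILE_FOR_GOOGLEBOT = "User-agent: Googlebot\nDisallow: /files/abc.html"

if __name__ == "__main__":
    print(is_allowed(BLOCK_ALL, "Googlebot", "/index.html"))  # False
    print(is_allowed(ALLOW_ALL, "Googlebot", "/index.html"))  # True
    print(is_allowed(BLOCK_FOLDER, "Bingbot", "/files/report.pdf"))  # False
    print(is_allowed(BLOCK_FOLDER, "Bingbot", "/about.html"))  # True
    # Only Googlebot is named, so other bots may still fetch abc.html.
    print(is_allowed(BLOCK_FILE_FOR_GOOGLEBOT, "Googlebot", "/files/abc.html"))  # False
    print(is_allowed(BLOCK_FILE_FOR_GOOGLEBOT, "Bingbot", "/files/abc.html"))  # True
```

Note the last example: a group that names only Googlebot leaves every other bot unaffected.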

2. Confused? What your final robots.txt file should be

Learning about the different directives can be overwhelming for an average user. If you do not want to block search engine spiders at all (which is recommended), use the following as your robots.txt file.

sitemap: http://www.websitename.com/sitemap.xml

User-agent: *

Disallow:

Make sure you change the sitemap URL to your own blog's sitemap. Blogger blogs have a default sitemap, while WordPress and other users can use tools or plugins to generate a sitemap for their blog.

After changing the sitemap URL, paste these 3 lines into a plain text editor such as Notepad and save the file as robots.txt. Then upload this file to your root folder, so that it can be accessed at www.websitename.com/robots.txt.
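If you prefer a script to Notepad, here is a minimal Python sketch that writes the same three lines to a `robots.txt` file and parses the saved file back as a sanity check. The function names are hypothetical, `SITEMAP_URL` is the placeholder from the text (swap in your own), and uploading the file to your server is not covered.

```python
import tempfile
from pathlib import Path
from urllib.robotparser import RobotFileParser

# Placeholder sitemap URL from the text; replace it with your own.
SITEMAP_URL = "http://www.websitename.com/sitemap.xml"


def recommended_robots_txt(sitemap_url: str) -> str:
    """Return the three-line 'allow everything' robots.txt recommended above."""
    return f"sitemap: {sitemap_url}\nUser-agent: *\nDisallow:\n"


def save_and_check(folder: Path, sitemap_url: str) -> RobotFileParser:
    """Write robots.txt into folder, then parse the saved file back."""
    target = folder / "robots.txt"
    target.write_text(recommended_robots_txt(sitemap_url), encoding="utf-8")
    parser = RobotFileParser()
    parser.parse(target.read_text(encoding="utf-8").splitlines())
    return parser


if __name__ == "__main__":
    # A throwaway folder is used here; in practice you would write into a
    # local copy of your site's root folder before uploading.
    with tempfile.TemporaryDirectory() as tmp:
        parser = save_and_check(Path(tmp), SITEMAP_URL)
        print(parser.site_maps())  # ['http://www.websitename.com/sitemap.xml']
        print(parser.can_fetch("Googlebot", "/any/page.html"))  # True
```

`site_maps()` needs Python 3.8 or later; it returns `None` when the file lists no sitemap.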

3. Tools to generate a robots.txt file

There are a number of free third-party tools to generate robots.txt files. Users with a basic understanding of the allow and block directives can use these tools to quickly create a customized robots.txt file.

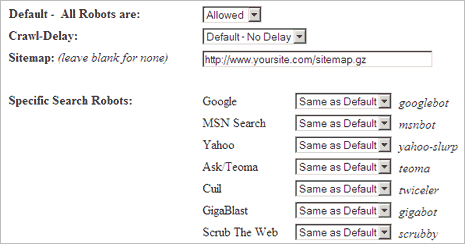

The Online Robots.txt Generator on the Mcanerin website provides a neat user interface for generating a robots.txt file. To get started, enter your sitemap URL, choose which bots to allow as required, and click the ‘create robots.txt’ button to create the final robots.txt file.

ROBOTStxt editor is a portable, downloadable tool for generating a robots.txt file. Select parameters from the drop-down menus and click the save button to generate a customized robots.txt file.
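Generators like these essentially assemble `User-agent` and `Disallow` lines from your choices. The following Python sketch shows that idea; `generate_robots_txt`, its `{user_agent: [disallowed paths]}` input format, and the bot name `BadBot` are assumptions for illustration, not how either tool actually works internally.

```python
from urllib.robotparser import RobotFileParser


def generate_robots_txt(sitemap_url: str, rules: dict[str, list[str]]) -> str:
    """Build robots.txt text from a {user_agent: [disallowed paths]} mapping.

    An empty path list yields a bare 'Disallow:' line (allow everything).
    Groups are separated by a single blank line.
    """
    groups = []
    for agent, paths in rules.items():
        lines = [f"User-agent: {agent}"]
        if paths:
            # One Disallow line per blocked path.
            lines.extend(f"Disallow: {p}" for p in paths)
        else:
            # An empty Disallow value blocks nothing.
            lines.append("Disallow:")
        groups.append("\n".join(lines))
    text = "\n\n".join(groups) + "\n"
    if sitemap_url:
        text = f"Sitemap: {sitemap_url}\n\n" + text
    return text


if __name__ == "__main__":
    text = generate_robots_txt(
        "http://www.websitename.com/sitemap.xml",
        {"*": ["/files/", "/private/"], "BadBot": ["/"]},  # BadBot is hypothetical
    )
    print(text)
    # Parse the generated text back to confirm it behaves as intended.
    parser = RobotFileParser()
    parser.parse(text.splitlines())
    print(parser.can_fetch("Googlebot", "/files/a.pdf"))  # False
    print(parser.can_fetch("Googlebot", "/blog/post.html"))  # True
    print(parser.can_fetch("BadBot", "/blog/post.html"))  # False
```

A crawler follows the group that names it and falls back to `User-agent: *` otherwise, which is why `BadBot` is blocked everywhere while other bots are only kept out of `/files/` and `/private/`.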

Final thoughts: a robots.txt file is not a mandatory requirement for every blog or website. However, it is recommended to have one to tell search engine bots and spiders which parts of the site they may crawl and which they may not!